About me

I'm a second-year PhD student advised by Professor Atlas Wang. I work on Graph Neural Networks, Efficient Learning, Deep Neural Network sparsity, Mixture of Experts, and Sparse Neural Networks. Sometimes I also dabble a little in LLMs and Computer Vision.

Outside of research and studies, I enjoy doing human things like reading, photography, and CrossFit. Sometimes you can catch me outside during daylight. Be sure to approach slowly; I am easily spooked.

What I'm doing


Researching

Researching and developing state-of-the-art methods in Machine Learning and Artificial Intelligence.


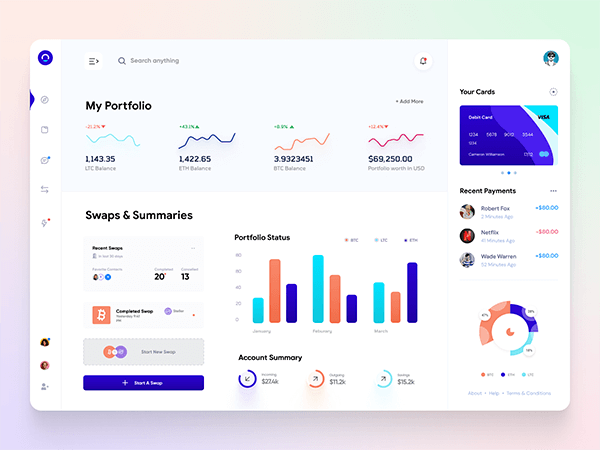

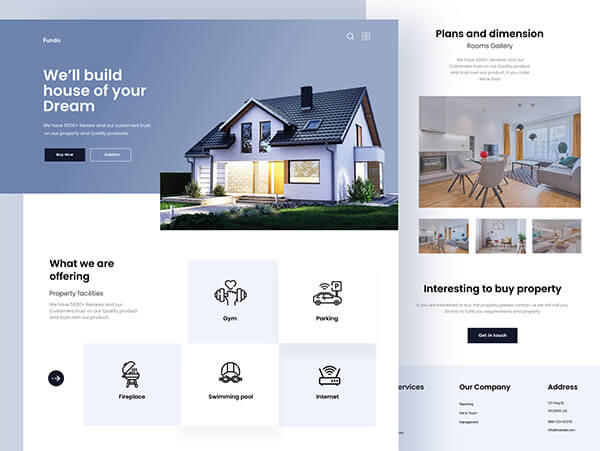

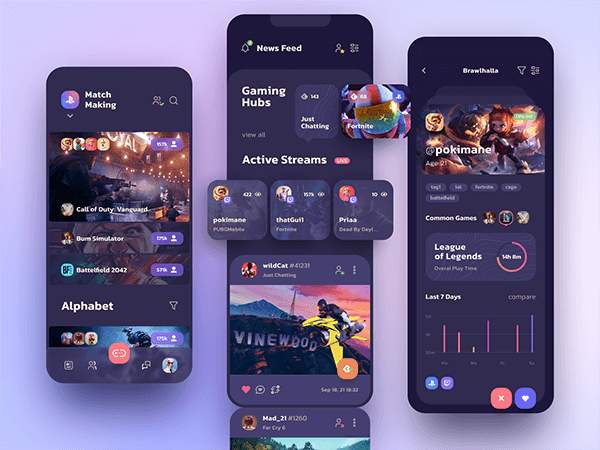

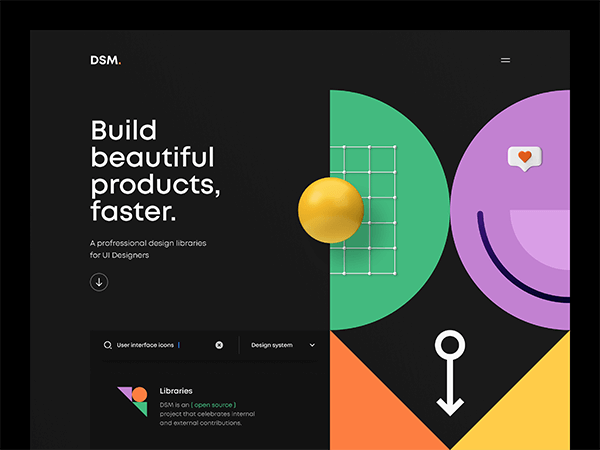

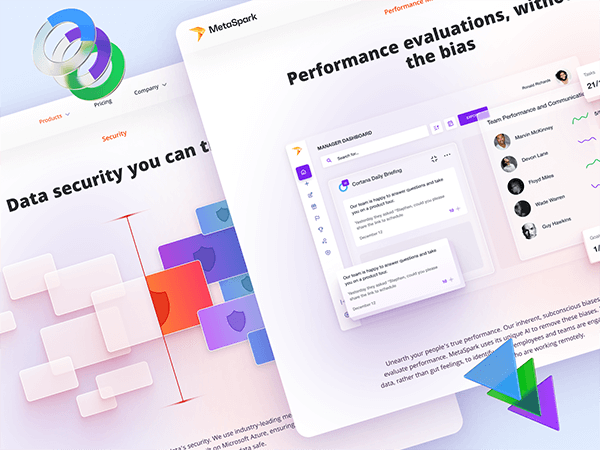

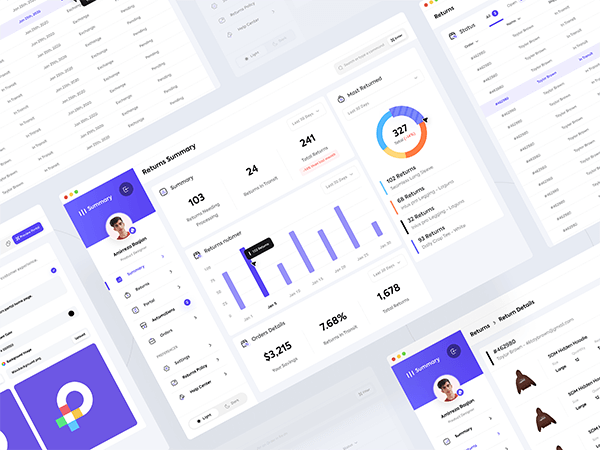

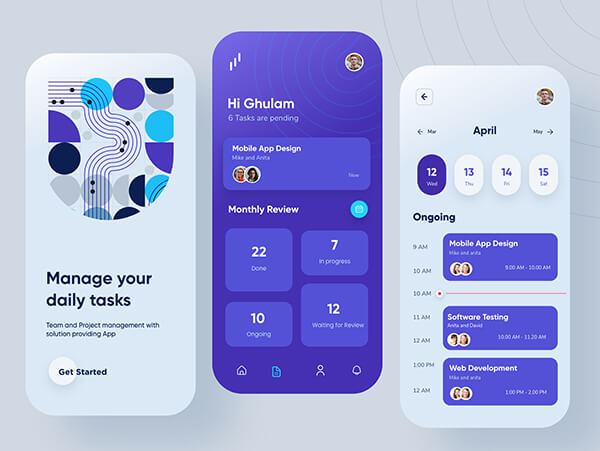

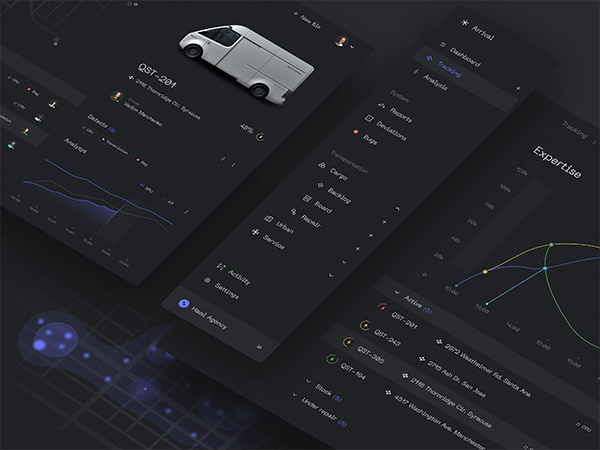

Application development

Working with industry to bring research ideas into working applications.


Photography

I am passionate about photography. I mostly take nature and street photos.